sched-ext Tutorial

Extensible Scheduler Class (better known as sched-ext) is a Linux kernel feature that enables implementing kernel thread schedulers in BPF (Berkeley Packet Filter) and loading them dynamically. Essentially, this allows users to switch schedulers from userspace without having to build another kernel just to get a different scheduler.

The schedulers can be found in the `scx-scheds` and `scx-scheds-git` packages.

```shell
# Stable branch + scx_loader and scxctl tools.
sudo pacman -S scx-scheds scx-tools
# Bleeding edge branch (this branch includes the latest changes from the master branch) + scx_loader and scxctl tools.
sudo pacman -S scx-scheds-git scx-tools-git
```

How to Launch and Manage the Scheduler

- To start the scheduler, open your terminal and enter the following command. For example, to start rusty:

```shell
sudo scx_rusty
```

This will launch the rusty scheduler and replace the default kernel scheduler.

To stop the scheduler, press CTRL + C; the default kernel scheduler will then take over again.
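To confirm which sched-ext scheduler (if any) is currently loaded, you can read the sysfs files described in the kernel's sched-ext documentation, `/sys/kernel/sched_ext/state` and `/sys/kernel/sched_ext/root/ops`. The function below is a minimal sketch; `scx_status` and the `SYSFS_ROOT` override are hypothetical names added only so it can be tried against a fake directory tree.

```shell
# Report whether a sched_ext scheduler is loaded, using the sysfs files
# described in the kernel's sched-ext documentation.
# SYSFS_ROOT is a hypothetical override (defaults to /sys) so the function
# can be tried against a fake directory tree.
scx_status() {
    dir="${SYSFS_ROOT:-/sys}/kernel/sched_ext"
    if [ ! -r "$dir/state" ]; then
        echo "sched_ext is not available in this kernel" >&2
        return 1
    fi
    state=$(cat "$dir/state")
    # root/ops holds the name of the loaded scheduler while one is active.
    if [ "$state" = "enabled" ] && [ -r "$dir/root/ops" ]; then
        echo "enabled: $(cat "$dir/root/ops")"
    else
        echo "$state"
    fi
}
```

Run `scx_status` after starting a scheduler: it should report `enabled` followed by the scheduler's name, and `disabled` again after you stop it with CTRL + C.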

scxctl is a CLI D-Bus client for interacting with scx_loader.

- Features:

- Get the current scheduler and mode

- List all available schedulers

- Start a scheduler in a given mode, or with given arguments

- Switch between schedulers and modes

- Stop the running scheduler

- Restart the running scheduler
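scxctl takes extra scheduler flags through `--args` as a single comma-separated string, as in the `cosmos` and `flash` examples that follow. If you are used to writing flags separated by spaces, a small shell helper can do the joining. This is a sketch; `scx_args` is a hypothetical name, not part of scxctl.

```shell
# Join scheduler flags into the single comma-separated string that
# scxctl's --args option expects (e.g. "-c,75,-m,0-15").
# scx_args is a hypothetical helper, not part of scxctl. Flags whose
# values themselves contain commas cannot be expressed this way.
scx_args() {
    out=""
    for arg in "$@"; do
        out="${out:+$out,}$arg"
    done
    printf '%s\n' "$out"
}
```

For example, `scxctl start --sched cosmos --args="$(scx_args -c 75 -m 0-15)"` produces the same call as the cosmos example below.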

```shell
scxctl start --sched flash --mode gaming
scxctl stop
scxctl restore
scxctl switch --sched bpfland --mode gaming
scxctl start --sched cosmos --args="-c,75,-m,0-15"
scxctl switch --sched flash --args="-s,20000"
```

```
$ scxctl --help
Usage: scxctl <COMMAND>

Commands:
  get      Get the info on the running scheduler
  list     List all supported schedulers
  start    Start a scheduler in a mode or with arguments
  switch   Switch schedulers or modes, optionally with arguments
  stop     Stop the current scheduler
  restart  Restart the current scheduler with original configuration
  restore  Restore the default scheduler from configuration
  help     Print this message or the help of the given subcommand(s)

Options:
  -h, --help     Print help
  -V, --version  Print version
```

scx_loader, as the name implies, is a utility that functions as a loader and manager for the sched-ext framework, using the D-Bus interface.

While it does not require systemd, it can still be used in conjunction with it. Check the transition guide for reference.

- It can start, stop, and restart an scx scheduler, read information about it, and more.

- You can use tools like `dbus-send` or `gdbus` to communicate with it.

- This guide explains how to use scx_loader with the `dbus-send` command.

- Starting scx_rusty with its default arguments

  ```shell
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.scx.Loader.StartScheduler string:scx_rusty uint32:0
  ```

- Starting a scheduler with arguments

  ```shell
  # This example starts scx_bpfland with the following flags: -k -c 0
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.scx.Loader.StartSchedulerWithArgs string:scx_bpfland array:string:"-k","-c","0"
  ```

- Stopping the currently running scheduler

  ```shell
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.scx.Loader.StopScheduler
  ```

- Switching to the default scheduler

  ```shell
  # scx_loader will switch to the default scheduler set in the scx_loader config file
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.scx.Loader.RestoreDefault
  ```

- Switching to another scheduler in mode 2

  ```shell
  # This switches to scx_lavd with scheduler mode 2, meaning it starts LAVD in powersave mode
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.scx.Loader.SwitchScheduler string:scx_lavd uint32:2
  ```

- Switching to another scheduler with arguments

  ```shell
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.scx.Loader.SwitchSchedulerWithArgs string:scx_bpfland array:string:"-k","-c","0"
  ```

- Getting the currently running scheduler

  ```shell
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.freedesktop.DBus.Properties.Get string:org.scx.Loader string:CurrentScheduler
  ```

- Getting a list of the supported schedulers

  ```shell
  dbus-send --system --print-reply --dest=org.scx.Loader /org/scx/Loader org.freedesktop.DBus.Properties.Get string:org.scx.Loader string:SupportedSchedulers
  ```
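The numeric mode argument is easy to get wrong when typing these commands by hand. The sketch below wraps the `SwitchScheduler` call from the examples above and maps mode names to numbers. Only 0 (default) and 2 (powersave) are confirmed by this guide; the other values follow scx_loader's mode list (Auto, Gaming, PowerSave, LowLatency, Server) as we understand it, so treat them as an assumption and check them against your scx_loader version. `scx_mode_id`, `scx_switch`, and `DRY_RUN` are hypothetical names.

```shell
# Map an scx_loader mode name to the uint32 value passed over D-Bus.
# Only 0 (default) and 2 (powersave) are confirmed by the examples above;
# the other values are an assumption to verify on your system.
scx_mode_id() {
    case "$1" in
        auto)       echo 0 ;;
        gaming)     echo 1 ;;
        powersave)  echo 2 ;;
        lowlatency) echo 3 ;;
        server)     echo 4 ;;
        *) echo "unknown mode: $1" >&2; return 1 ;;
    esac
}

# Switch scheduler and mode via org.scx.Loader.SwitchScheduler.
# Set DRY_RUN=1 to print the command instead of running it.
scx_switch() {
    mode_id=$(scx_mode_id "$2") || return 1
    set -- dbus-send --system --print-reply --dest=org.scx.Loader \
        /org/scx/Loader org.scx.Loader.SwitchScheduler \
        "string:$1" "uint32:$mode_id"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$@"
    else
        "$@"
    fi
}
```

`scx_switch scx_lavd powersave` sends the same call as the mode 2 example above.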


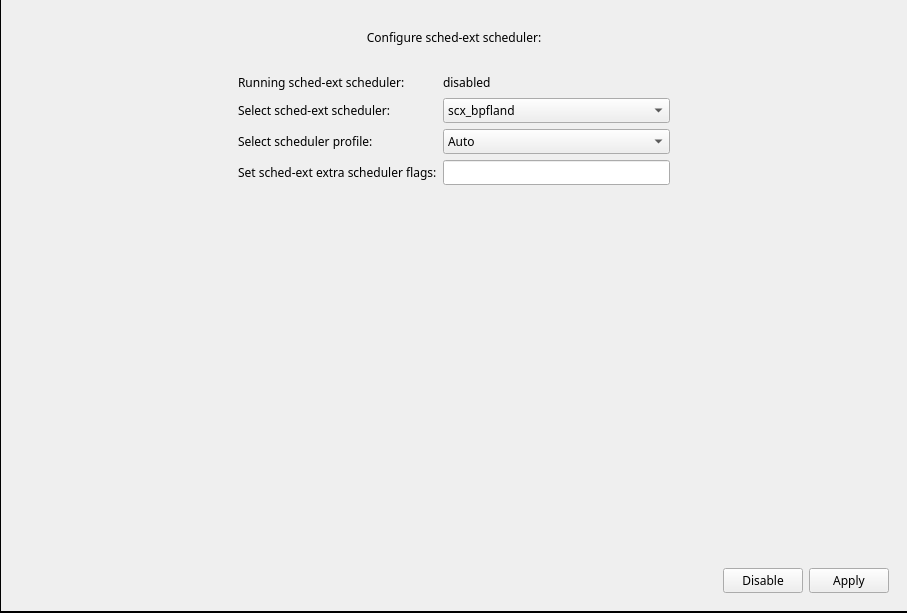

In the CachyOS Kernel Manager, you can access and configure the schedulers via the sched-ext scheduler config button.

SCX Manager is a standalone GUI tool derived from the CachyOS Kernel Manager. It allows users to manage the sched-ext framework and its schedulers through the scx_loader.

Features:

- Check which scheduler is currently active

- Select a scheduler or profile: (Auto, Gaming, Power save, Low Latency or Server)

- Set additional flags

- Disable the current scheduler


Scheduler Guide: Profiles and Use Cases

Since there are many schedulers to choose from, here is a short introduction to each of them.

Feel free to report any issue or feedback to the scheduler's repository.

Use `scx_schedulername --help` to see the available flags and a brief description of what they do, for example:

```shell
scx_rusty --help
```

scx_bpfland

Developed by: Andrea Righi (arighi GitHub)

Production Ready?

A vruntime-based sched_ext scheduler that prioritizes interactive workloads. Highly flexible and easy to adapt.

When deciding which cores to use, bpfland takes into account their cache layout and which cores share the same L2/L3 cache, leading to fewer cache misses and therefore better performance.

- Use cases:

- Gaming

- Desktop usage

- Multimedia/Audio production

- Great interactivity under intensive workloads

- Power saving

- Server

Scheduler Modes

Low Latency

- Command-line Flags: `-m performance -w`
- Description: Meant to lower latency at the cost of throughput. Suitable for soft real-time applications like audio processing and multimedia.

Power Save

- Command-line Flags: `-s 20000 -m powersave -I 100 -t 100`
- Description: Prioritizes power efficiency. Favors less performant cores (e.g., E-cores on Intel).

Server

- Command-line Flags: `-s 20000 -S`
- Description: Prioritizes tasks with strict affinity. This option can increase throughput at the cost of latency, which makes it more suitable for server workloads.
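To try one of these modes without scx_loader, the flags can be passed straight to the scheduler binary. A minimal sketch for bpfland: the profile names are our own labels, the flags are copied from the list above, and `bpfland_profile` and `DRY_RUN` are hypothetical names.

```shell
# Launch scx_bpfland with the flags of one of the modes listed above.
# Profile names are our own labels; the flags are copied from this guide.
# Set DRY_RUN=1 to print the command instead of starting the scheduler.
bpfland_profile() {
    case "$1" in
        lowlatency) flags="-m performance -w" ;;
        powersave)  flags="-s 20000 -m powersave -I 100 -t 100" ;;
        server)     flags="-s 20000 -S" ;;
        *) echo "unknown profile: $1" >&2; return 1 ;;
    esac
    # $flags is intentionally unquoted so it splits into separate arguments.
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo sudo scx_bpfland $flags
    else
        sudo scx_bpfland $flags
    fi
}
```

As with starting a scheduler manually, press CTRL + C to stop it again.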

scx_beerland

Developed by: Andrea Righi (arighi GitHub)

Production Ready?

scx_beerland is a scheduler designed to prioritize locality and scalability.

It prioritizes keeping tasks on the same CPU to maintain cache locality, while also ensuring good scalability across many CPUs by using local DSQs (per-CPU runqueues) when the system is not saturated.

- Use cases:

- Cache-intensive workloads

- Systems with a large amount of CPUs

- Gaming: It's known to work surprisingly well in certain games, although your mileage may vary.

- Server: Good for general-purpose server workloads due to its scalability and locality optimizations.

- It can be used for desktop workloads as well.

Scheduler Modes

None for the moment.

scx_rustland

Developed by: Andrea Righi (arighi GitHub)

Production Ready?

For performance-critical production scenarios, other schedulers are likely to exhibit better performance, as offloading all scheduling decisions to user-space comes with a certain cost (even if it’s minimal).

However, a scheduler entirely implemented in user-space holds the potential for seamless integration with sophisticated libraries, tracing tools, external services (e.g., AI), etc.

Hence, there might be situations where the benefits outweigh the overhead, justifying the use of this scheduler in a production environment.

It shares similarities with bpfland and was made to be easy to read and understand, thanks to its implementation in userspace.

Keep in mind that there is a slight throughput disadvantage when using a userspace scheduler.

- Use cases:

- Low latency workloads (Gaming, video conferences and live streaming)

- Desktop usage

scx_flash

Developed by: Andrea Righi (arighi GitHub)

Production Ready?

A scheduler that focuses on ensuring fairness among tasks and performance predictability.

It operates using an earliest deadline first (EDF) policy, where each task is assigned a “latency” weight. This weight is dynamically adjusted based on how often a task releases the CPU before its full time slice is used.

Tasks that release the CPU early are given a higher latency weight, prioritizing them over tasks that fully consume their time slice.

- Use cases:

- Gaming

- Latency sensitive workloads such as multimedia or real-time audio processing

- Responsiveness under heavily overloaded conditions

- Consistency in performance

- Server

Scheduler Modes

Low Latency

- Command-line Flags: `-m performance -w -C 0`
- Description: Meant to lower latency at the cost of throughput. Suitable for soft real-time applications like audio processing and multimedia.

Gaming

- Command-line Flags: `-m all`
- Description: Optimizes for high performance in games.

Power Save

- Command-line Flags: `-m powersave -I 10000 -t 10000 -s 10000 -S 1000`
- Description: Prioritizes power efficiency. Favors less performant cores (e.g., E-cores on Intel) and introduces a forced idle cycle every 10ms to increase power saving.

Server

- Command-line Flags: `-m all -s 20000 -S 1000 -I -1 -D -L`
- Description: Tuned for server workloads. Trades responsiveness for throughput.

scx_cosmos

Developed by: Andrea Righi (arighi GitHub)

- Production Ready?

Lightweight scheduler optimized for preserving task-to-CPU locality.

When the system is not saturated, the scheduler prioritizes keeping tasks on the same CPU using local DSQs. This not only maintains locality but also reduces locking contention compared to shared DSQs, enabling good scalability across many CPUs.

- Use cases:

- General-purpose scheduler: the scheduler should adapt itself to both server and desktop workloads.

Scheduler Modes

- Command-line Flags: `-d`
- Description: Disables deferred wakeups. Reduces throughput and performance for certain workloads while decreasing power consumption.

Gaming

- Command-line Flags: `-c 0 -p 0`
- Description: Disables CPU load tracking and always enforces deadline-based scheduling to improve responsiveness.

Power Save

- Command-line Flags: `-m powersave -d -p 5000`
- Description: Prioritizes power efficiency. Favors less performant cores (e.g., E-cores on Intel) and disables deferred wakeups, reducing throughput while increasing power efficiency. CPU load polling is increased to 5ms.

Low Latency

- Command-line Flags: `-m performance -c 0 -p 0 -w`
- Description: Meant to lower latency at the cost of throughput. Suitable for soft real-time applications like audio processing and multimedia. Always enforces deadline-based scheduling and synchronous wakeup optimizations to improve performance predictability.

Server

- Command-line Flags: `-s 20000`
- Description: Enables address space affinity to improve locality and performance in certain cache-sensitive workloads. Polling is increased to 20ms.

scx_lavd

Developed by: Changwoo Min (multics69 GitHub).

- Production Ready?

Brief introduction to LAVD from Changwoo:

LAVD is a new scheduling algorithm which is still under development. It is motivated by gaming workloads, which are latency-critical and communication-heavy. It aims to minimize latency spikes while maintaining overall good throughput and fair use of CPU time among tasks.

- Use cases:

- Gaming

- Audio Production

- Latency sensitive workloads

- Desktop usage

- Great interactivity under intensive workloads

- Power saving

One of LAVD's main capabilities is Core Compaction. Without going into technical details: when CPU usage is below 50%, the currently active cores run for longer and at a higher frequency, while idle cores stay in a C-state (sleep) for much longer, reducing overall power usage.

Scheduler Modes

Gaming & Low Latency

- Command-line Flags: `--performance`
- Description: Maximizes performance by using all available cores, prioritizing physical cores.

Power Save

- Command-line Flags: `--powersave`
- Description: Minimizes power consumption while maintaining reasonable performance. Prioritizes efficient cores and threads over physical cores.

scx_rusty

Developed by: David Vernet (Byte-Lab GitHub)

- Production Ready?

- Yes, if tuned correctly for your specific workload and hardware.

Rusty offers a wide range of features that enhance its capabilities, providing greater flexibility for various use cases. One of these features is tunability, allowing you to customize Rusty to suit your preferences and specific requirements.

- Use cases:

- Gaming

- Latency sensitive workloads

- Desktop usage

- Multimedia/Audio production

- Great interactivity under intensive workloads

- Power saving


scx_p2dq

Developed by: Daniel Hodges (hodgesds GitHub)

A general-purpose scheduler that focuses on pick-two load balancing between LLCs (last-level caches). It keeps high cache locality and work conservation while providing reasonable latency.

- Use cases:

- Server

- Desktop environments

- Gaming (with some manual tuning)

Scheduler Modes

Gaming

- Command-line Flags: `--task-slice true -f --sched-mode performance`
- Description: Improves consistency in gaming performance and increases bias towards scheduling on higher-performance cores.

Low Latency

- Command-line Flags: `-y -f --task-slice true`
- Description: Lowers latency by making interactive tasks stick more to the CPU they were assigned to and by making slice times more stable.

Power Save

- Command-line Flags: `--sched-mode efficiency`
- Description: Enhances power efficiency by prioritizing power-efficient cores.

Server

- Command-line Flags: `--keep-running`
- Description: Improves server workloads by allowing tasks to run beyond their slice if the CPU is idle.

scx_tickless

Developed by: Andrea Righi (arighi GitHub)

- Production Ready?

- This scheduler is still experimental and not recommended for production use.

scx_tickless is a server-oriented scheduler designed for cloud computing, virtualization, and high-performance computing workloads.

The scheduler works by routing all scheduling events through a pool of primary CPUs assigned to handle these events. This allows disabling the scheduler’s tick on other CPUs, reducing OS noise.

- Use cases:

- Cloud computing

- Virtualization

- High performance computing workloads

- Server

Scheduler Modes

Gaming

- Command-line Flags: `-f 5000 -s 5000`
- Description: Boosts gaming performance by increasing how often the scheduler detects CPU contention and triggers context switches, and by using a shorter time slice.

Power Save

- Command-line Flags: `-f 50`
- Description: Enhances power efficiency by checking for CPU contention less often.

Low Latency

- Command-line Flags: `-f 5000 -s 1000`
- Description: Similar to the gaming profile, but with a further reduced slice.

Server

- Command-line Flags: `-f 100`
- Description: Reduces how often the scheduler checks for CPU contention to improve throughput at the cost of responsiveness.

Configuration and performance testing

LAVD Autopilot & Autopower

Quotes from Changwoo Min:

- In autopilot mode, the scheduler adjusts its power mode (Powersave, Balanced, or Performance) based on the system load, specifically CPU utilization.

- Autopower: Automatically decide the scheduler’s power mode based on the system’s energy profile, aka EPP (Energy Performance Preference).
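Because Autopower follows the EPP setting, it can help to check which EPP value the CPUs currently report before enabling it. A minimal sketch that reads the per-CPU `energy_performance_preference` files exposed by cpufreq drivers with EPP support (for example intel_pstate, or amd-pstate in active mode); `show_epp` and the `SYSFS_ROOT` override are hypothetical names.

```shell
# Print the EPP (Energy Performance Preference) of each CPU.
# The sysfs files only exist when the cpufreq driver supports EPP.
# SYSFS_ROOT is a hypothetical override (defaults to /sys) for testing.
show_epp() {
    found=0
    for f in "${SYSFS_ROOT:-/sys}"/devices/system/cpu/cpu[0-9]*/cpufreq/energy_performance_preference; do
        [ -r "$f" ] || continue       # skip the unexpanded pattern if nothing matched
        cpu=${f%/cpufreq/*}           # strip the trailing cpufreq path
        cpu=${cpu##*/}                # keep just "cpuN"
        echo "$cpu: $(cat "$f")"
        found=1
    done
    if [ "$found" = 0 ]; then
        echo "no EPP interface found" >&2
        return 1
    fi
}
```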

```shell
# Autopower can be activated with the following flag: --autopower
# e.g.:
scx_lavd --autopower
```

ananicy-cpp & sched-ext

ananicy-cpp should not run alongside a sched-ext scheduler, since doing so is one of the most common causes of scheduler crashes. To disable and stop ananicy-cpp, run the following command:

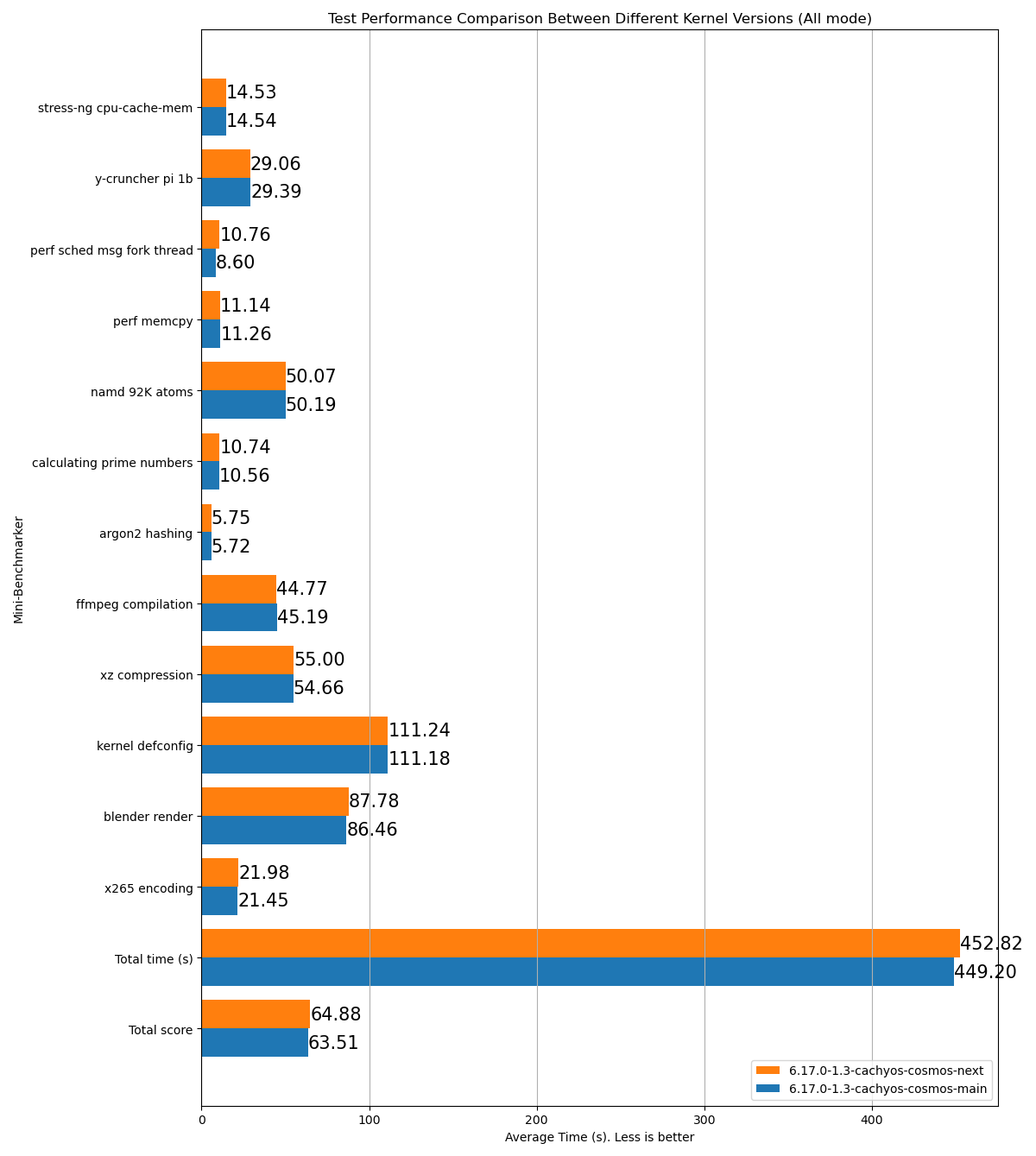

```shell
systemctl disable --now ananicy-cpp
```

Benchmarking and comparing schedulers with cachyos-benchmarker

The cachyos-benchmarker tool provides an easy way to evaluate and compare the performance of different CPU schedulers.

It runs a comprehensive suite of benchmarks to measure CPU, memory, and overall system performance under various workloads.

The following benchmarks are included:

| Test | Measures | Tool |
|---|---|---|
| stress-ng cpu-cache-mem | CPU, cache, and memory performance | stress-ng |
| FFmpeg compilation | Parallel build performance | make |
| x265 encoding | Video encoding throughput | x265 |
| argon2 hashing | Multithreaded password hashing | argon2 |
| perf sched msg | Context switching and IPC performance | perf |
| perf memcpy | Memory throughput (memcpy()) | perf |
| prime calculation | Integer arithmetic and parallelism | primesieve |
| NAMD | Molecular dynamics (scientific workload) | namd3 |
| Blender render | CPU-only 3D rendering | blender |
| xz compression | Compression throughput | xz |
| Kernel defconfig build | Kernel compilation performance | make |
| y-cruncher | Mathematical precision and memory stress | y-cruncher |

cachyos-benchmarker can be used for several purposes, including:

- Testing scheduler stability

  - Run the full benchmark suite to detect stalls, crashes, or regressions introduced by scheduler changes.

  - If you are using scx_loader, you can collect logs in case of a stall or crash with:

    ```shell
    journalctl --unit scx_loader.service --boot 0 > crash.log
    ```

    This will create a file named `crash.log` in your current directory.

- Comparing scheduler performance

  - Evaluate performance differences between schedulers, e.g. bpfland vs LAVD.

- Measuring the effect of kernel or scheduler updates

  - Compare runs before and after applying patches or version changes to check for performance regressions or improvements.

- Testing configuration tweaks

  - Assess the impact of changes such as CPU governor settings, SMT toggling, or modified scheduler flags.

Requirements

- 4 GB RAM or more

- At least 8 GB of free storage space

- Time and patience - the full benchmark can take over an hour on slower systems

Installation

To install cachyos-benchmarker, run the following command:

```shell
sudo pacman -S cachyos-benchmarker
```

Running the benchmark

- Execute cachyos-benchmarker:

  ```shell
  cachyos-benchmarker ~/cachyos-benchmarker/
  # You can replace ~/cachyos-benchmarker/ with any directory you want the logs to be saved in.
  ```

- Wait until the preparation steps finish.

- Follow the prompts:

  ```
  Do you want to drop page cache now? Root privileges needed! (y/N) y
  Please enter a name for this run, or leave empty for default:
  ```

- Wait for the tests to finish.

- Once finished, the following will happen:

  - A log file with a name like `benchie_<name>_<DATE>.log` is created, containing detailed information about the benchmark run. Example: `benchie_p2dq_2025-09-29-2115.log`

  - The `benchmark_scraper.py` script automatically runs to generate a summary report in HTML format. What does the script do?

    - Reads all `benchie_*.log` files in the specified directory.
    - Extracts the benchmark names, times, and scores.
    - Sorts or aggregates them.
    - Prints a clean summary of the results to your terminal and creates an HTML file that can be opened in a browser.

Terminal output example:

```
stress-ng cpu-cache-mem: 15.26
y-cruncher pi 1b: 31.23
perf sched msg fork thread: 8.892
perf memcpy: 13.53
namd 92K atoms: 53.54
calculating prime numbers: 11.126
argon2 hashing: 6.62
ffmpeg compilation: 53.38
xz compression: 61.13
kernel defconfig: 130.73
blender render: 96.29
x265 encoding: 24.99
Total time (s): 506.72
Total score: 70.71
Name: p2dq
Date: 2025-09-29-2115
System:
  Kernel: 6.17.0-1.1-cachyos-p2dq arch: x86_64 bits: 64
  Desktop: KDE Plasma v: 6.4.5 Distro: CachyOS
Memory:
  System RAM: total: 32 GiB available: 30.61 GiB used: 7.54 GiB (24.6%)
  Device-1: Channel-A DIMM 0 type: LPDDR5 size: 8 GiB speed: 7500 MT/s
  Device-2: Channel-B DIMM 0 type: LPDDR5 size: 8 GiB speed: 7500 MT/s
  Device-3: Channel-C DIMM 0 type: LPDDR5 size: 8 GiB speed: 7500 MT/s
  Device-4: Channel-D DIMM 0 type: LPDDR5 size: 8 GiB speed: 7500 MT/s
CPU:
  Info: 8-core model: AMD Ryzen 7 8845HS w/ Radeon 780M Graphics bits: 64 type: MT MCP cache: L2: 8 MiB
  Speed (MHz): avg: 3366 min/max: 419/5138 cores: 1: 3366 2: 3366 3: 3366 4: 3366 5: 3366 6: 3366 7: 3366 8: 3366 9: 3366 10: 3366 11: 3366 12: 3366 13: 3366 14: 3366 15: 3366 16: 3366
SCX Scheduler: p2dq_1.0.21_gf90c2aa1_dirty_x86_64_unknown_linux_gnu
SCX Version: p2dq_1.0.21_gf90c2aa1_dirty_x86_64_unknown_linux_gnu
Version : 0.5.1-1
```

HTML example of a test result comparing two different branches of the same scheduler:

- To compare two or more runs, place the `.log` files in the same directory before running `benchmark_scraper.py`. The tool will automatically detect and compare them in the HTML report.
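For a quick side-by-side view in the terminal, two saved copies of the summary can also be compared line by line. This sketch assumes each summary was saved to a text file in the `test: value` format of the terminal output example; `compare_runs` is a hypothetical helper, and the HTML report remains the tool's own comparison. In the example above the per-test values add up to the reported total time, so they are times in seconds: a negative change means the second run was faster.

```shell
# Compare two saved cachyos-benchmarker terminal summaries ("test: value"
# lines) and print the change of each numeric entry in percent.
# compare_runs is a hypothetical helper; non-numeric lines (Name, Date,
# system info) are skipped automatically.
compare_runs() {
    awk -F': ' '
        # First file: remember every "name: number" pair.
        NR == FNR { if ($2 ~ /^[0-9.]+$/) base[$1] = $2; next }
        # Second file: print entries that also appear in the first file.
        ($1 in base) && $2 ~ /^[0-9.]+$/ && base[$1] > 0 {
            printf "%s: %s -> %s (%+.1f%%)\n", $1, base[$1], $2, ($2 - base[$1]) / base[$1] * 100
        }
    ' "$1" "$2"
}
```

Usage: `compare_runs bpfland.txt lavd.txt`.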

Testing scheduler latency with schbench

schbench is a scheduler benchmark designed to measure scheduler latency under a simulated server-style workload. It spawns a configurable number of “worker” and “message” threads, where messages repeatedly wake up workers. By measuring the latency distribution from wakeup to execution of these worker threads, it provides critical insight into a kernel’s ability to handle thread wakeups, balancing, and CPU contention, especially under load.

Use cases

You can use schbench to:

- Evaluate scheduler latency: Identify how quickly threads are scheduled after waking up.

- Compare wakeup performance between schedulers: Detect improvements or regressions in context switching and wakeup latency.

- Test the effect of kernel or scheduler patches: Assess if tuning or updates affect scheduling fairness and responsiveness.

Installation

schbench is available in the CachyOS repositories:

```shell
sudo pacman -S schbench
```

Running the benchmark

A simple way to run schbench for a general latency test is:

```shell
schbench -m 2 -t 8 -r 60
```

This example runs:

- 2 message threads (`-m 2`)
- 8 worker threads per message thread (`-t 8`)
- for 60 seconds total runtime (`-r 60`)

You can adjust these values depending on your CPU core count and the desired load level.
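To compare schedulers under the same load, the run can be repeated once per scheduler. This sketch assumes scx_loader is already running a scheduler (it uses `scxctl switch`, which changes the running one, without `--mode`, as in the `--args` examples earlier) and that a short pause is enough for the new scheduler to take over. `schbench_sweep`, `run`, and `DRY_RUN` are hypothetical names.

```shell
# Run the same schbench workload under several schedulers, saving each
# result to <outdir>/<scheduler>.txt. Assumes scx_loader is already running
# a scheduler. The 5 s pause is an arbitrary settle time.
# DRY_RUN=1 prints the commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

schbench_sweep() {
    outdir=$1
    shift
    run mkdir -p "$outdir"
    for sched in "$@"; do
        run scxctl switch --sched "$sched"
        run sleep 5
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "schbench -m 2 -t 8 -r 60 > $outdir/$sched.txt"
        else
            # Capture stdout and stderr in case the statistics go to stderr.
            schbench -m 2 -t 8 -r 60 > "$outdir/$sched.txt" 2>&1
        fi
    done
    run scxctl restore   # go back to the default scheduler from the config
}
```

Usage: `schbench_sweep ~/schbench-results bpfland lavd flash`.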

Here is a table explaining some of the key options:

| Option | Description |
|---|---|
| `-C, --calibrate` | Run calibration and report timing (no benchmark). |
| `-L, --no-locking` | Disable spinlocks during CPU work (default: locking enabled). |
| `-m, --message-threads <n>` | Number of message threads (default: 1). |
| `-t, --threads <n>` | Worker threads per message thread (default: number of CPUs). |
| `-r, --runtime <sec>` | Benchmark duration in seconds (default: 30). |
| `-F, --cache_footprint <KB>` | Cache footprint size (default: 256). |
| `-n, --operations <count>` | Number of “think time” operations to perform (default: 5). |
| `-A, --auto-rps` | Automatically grow RPS until CPU utilization target is reached. |
| `-R, --rps <count>` | Requests per second mode. |
| `-p, --pipe <bytes>` | Simulate a pipe transfer test. |
| `-w, --warmuptime <sec>` | Warm-up duration before collecting stats (default: 0). |
| `-i, --intervaltime <sec>` | Interval for printing latencies (default: 10). |
| `-z, --zerotime <sec>` | Interval for zeroing latency stats (default: never). |

Understanding the output

After each run, schbench prints latency percentiles like:

Output example

```
Wakeup Latencies percentiles (usec) runtime 10 (s) (2406 total samples)
      50.0th: 60 (648 samples)
      90.0th: 2034 (968 samples)
    * 99.0th: 4104 (211 samples)
      99.9th: 10128 (22 samples)
      min=1, max=10308
Request Latencies percentiles (usec) runtime 10 (s) (2394 total samples)
      50.0th: 49216 (726 samples)
      90.0th: 69760 (954 samples)
    * 99.0th: 166656 (212 samples)
      99.9th: 273920 (21 samples)
      min=11770, max=334247
RPS percentiles (requests) runtime 10 (s) (11 total samples)
      20.0th: 234 (3 samples)
    * 50.0th: 238 (3 samples)
      90.0th: 241 (4 samples)
      min=230, max=248
current rps: 230.99
Wakeup Latencies percentiles (usec) runtime 10 (s) (2406 total samples)
      50.0th: 60 (648 samples)
      90.0th: 2034 (968 samples)
    * 99.0th: 4104 (211 samples)
      99.9th: 10128 (22 samples)
      min=1, max=10308
Request Latencies percentiles (usec) runtime 10 (s) (2406 total samples)
      50.0th: 49216 (729 samples)
      90.0th: 69760 (956 samples)
    * 99.0th: 165632 (212 samples)
      99.9th: 273920 (22 samples)
      min=11770, max=334247
RPS percentiles (requests) runtime 10 (s) (11 total samples)
      20.0th: 234 (3 samples)
    * 50.0th: 238 (3 samples)
      90.0th: 241 (4 samples)
      min=230, max=248
average rps: 240.60
```

How to interpret the results

- Wakeup Latencies:

  - Measures how quickly threads wake up after being signaled.

  - Lower values here (especially the 99th percentile) mean the scheduler is more responsive.

- Request Latencies:

  - Represents the time taken to complete requests between threads.

  - Lower latency indicates better inter-thread communication and scheduling efficiency.

- RPS (Requests Per Second):

  - Shows the sustained throughput. A higher average RPS indicates the scheduler can handle more work per second under the given configuration.

In conclusion:

- A good scheduler will show low wakeup and request latencies with consistent RPS.

- A less efficient scheduler may exhibit high latency spikes or unstable RPS values over time.
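When comparing many runs, pulling out the key number by hand gets tedious. The awk sketch below prints the 99th-percentile wakeup latency (the line marked with `*`) from the last `Wakeup Latencies` block of a saved schbench output, which is the final summary. `p99_wakeup` is a hypothetical helper, and it assumes the output layout shown above.

```shell
# Print the 99th-percentile wakeup latency (usec) from the last
# "Wakeup Latencies" block of a saved schbench output file.
# p99_wakeup is a hypothetical helper that assumes the layout shown above.
p99_wakeup() {
    awk '
        /Wakeup Latencies/ { in_wakeup = 1; next }    # start of a wakeup block
        /Latencies|RPS/    { in_wakeup = 0 }          # any other block ends it
        in_wakeup && /99\.0th:/ {
            sub(/.*99\.0th:[ \t]*/, "")               # drop everything up to the value
            split($0, f, " ")
            val = f[1]                                # later blocks overwrite earlier ones
        }
        END { if (val == "") exit 1; print val }
    ' "$1"
}
```

For the example output above, `p99_wakeup` prints `4104`.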

Recommendations for benchmarking games

If you want to benchmark games to compare how different schedulers perform, here are some tips to get the most accurate results:

- Use built-in benchmarks: Many modern games come with built-in benchmarking tools. These are designed to provide consistent results by running the same sequence of events each time.

- Check out this website for a list of games that include built-in benchmarks.

- Consistent settings: Ensure that the game settings (resolution, graphics quality, etc.) are the same for each test run.

- Close background applications: Other applications running in the background can affect performance. Close unnecessary programs to minimize their impact.

- If you’re not using a built-in benchmark, try to perform the same actions in the game for each test run. This could include following the same path, engaging in similar combat scenarios, or performing the same tasks.

- Even not aiming at the same spot can lead to different performance results.

- Multiple runs: Perform multiple runs of the benchmark and take the average to account for variability.

- Use performance monitoring tools: Tools like MangoHud or GOverlay can provide real-time performance metrics such as FPS, frame times, and CPU/GPU usage.

- Take advantage of keyboard shortcuts or macros:

  - One example is to create a keybinding with which you can switch between different schedulers or change their modes on the fly while in-game.

  - This can be done using a tool like scxctl or by creating custom scripts that change the active scheduler and its mode.
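A minimal sketch of such a toggle script, suitable for binding to a desktop keyboard shortcut. It assumes scx_loader is running, reads the current scheduler from `/sys/kernel/sched_ext/root/ops` (whose value we assume begins with the scheduler's short name, such as `bpfland`), and alternates between two schedulers with `scxctl`. The default scheduler pair and mode are only examples; `scx_toggle`, `SYSFS_ROOT`, and `DRY_RUN` are hypothetical names.

```shell
# Toggle between two schedulers via scxctl, e.g. from a keyboard shortcut.
# Assumption: root/ops starts with the scheduler's short name.
# SYSFS_ROOT and DRY_RUN are hypothetical overrides for testing.
scx_toggle() {
    a=${1:-bpfland} b=${2:-lavd} mode=${3:-gaming}
    cur=$(cat "${SYSFS_ROOT:-/sys}/kernel/sched_ext/root/ops" 2>/dev/null)
    case "$cur" in
        "$a"*) next=$b ;;   # first scheduler active: switch to the second
        *)     next=$a ;;   # anything else (or nothing): use the first
    esac
    # Nothing loaded yet means there is nothing to switch from.
    if [ -n "$cur" ]; then action=switch; else action=start; fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo scxctl "$action" --sched "$next" --mode "$mode"
    else
        scxctl "$action" --sched "$next" --mode "$mode"
    fi
}
```

Bind a shortcut to a script that calls `scx_toggle bpfland lavd gaming`, then press it between benchmark runs.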

Uploading and sharing your benchmarks

This website contains a list of benchmarks done by the community using different schedulers or testing various settings.

To upload your own benchmarks, you’ll have to link your Discord account to the website.

Then click on the New benchmark button and fill in the required information.

- You can upload multiple results for the same game using different schedulers or settings.

- Accepts both MangoHud and Afterburner logs.

- Allows searching by title or description.

Transitioning from scx.service to scx_loader: A Comprehensive Guide

First, let’s start with a close-up comparison between the scx.service file structure and the scx_loader configuration file structure.

If you previously had LAVD running with the old scx.service, configured in `/etc/default/scx` like the example below:

```shell
# List of scx_schedulers: scx_bpfland scx_central scx_flash scx_lavd scx_layered scx_nest scx_qmap scx_rlfifo scx_rustland scx_rusty scx_simple scx_userland
SCX_SCHEDULER=scx_lavd

# Set custom flags for the scheduler
SCX_FLAGS='--performance'
```

Then the equivalent in the scx_loader configuration file will look like:

```toml
default_sched = "scx_lavd"
default_mode = "Auto"

[scheds.scx_lavd]
auto_mode = ["--performance"]
```

For more information on how to configure the scx_loader file

Follow the guide below for an easy transition from the scx systemd service to the new scx_loader utility.

- Disabling scx.service in favor of scx_loader.service

  ```shell
  systemctl disable --now scx.service && systemctl enable --now scx_loader.service
  ```

- Creating the configuration file for scx_loader and adding the default structure

  ```shell
  # Micro editor is going to create a new file.
  sudo micro /etc/scx_loader.toml
  ```

  ```toml
  # Add the following lines:
  default_sched = "scx_bpfland" # Edit this line to the scheduler you want scx_loader to start at boot
  default_mode = "Auto" # Possible values: "Auto", "Gaming", "LowLatency", "PowerSave".
  ```

  Press CTRL + S to save changes and CTRL + Q to exit Micro.

- Restarting scx_loader

  ```shell
  systemctl restart scx_loader.service
  ```

- You’re done; scx_loader will now load and start the desired scheduler.
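If your old `/etc/default/scx` contained custom flags, the mapping shown at the start of this guide (`SCX_SCHEDULER` to `default_sched`, `SCX_FLAGS` to `auto_mode`) can be scripted. This is a sketch under that assumption: `scx_convert` is a hypothetical helper, it sources the old file (which executes it as shell code, so only use it on your own config), and it always writes `default_mode = "Auto"`. Review its output before saving it as `/etc/scx_loader.toml`.

```shell
# Convert an old /etc/default/scx file into an scx_loader.toml snippet:
#   SCX_SCHEDULER -> default_sched, SCX_FLAGS -> [scheds.<name>] auto_mode.
# scx_convert is a hypothetical helper; review its output before using it.
scx_convert() {
    # Subshell: the sourced variables and "set -f" do not leak to the caller.
    (
        set -f                      # no glob expansion when splitting SCX_FLAGS
        SCX_SCHEDULER="" SCX_FLAGS=""
        case $1 in */*) conf=$1 ;; *) conf=./$1 ;; esac   # "." would search PATH otherwise
        . "$conf"
        [ -n "$SCX_SCHEDULER" ] || { echo "SCX_SCHEDULER not set in $1" >&2; exit 1; }
        printf 'default_sched = "%s"\n' "$SCX_SCHEDULER"
        printf 'default_mode = "Auto"\n'
        if [ -n "$SCX_FLAGS" ]; then
            printf '\n[scheds.%s]\n' "$SCX_SCHEDULER"
            list=""
            for flag in $SCX_FLAGS; do           # split flags on whitespace
                list="${list:+$list, }\"$flag\""
            done
            printf 'auto_mode = [%s]\n' "$list"
        fi
    )
}
```

Usage: `scx_convert /etc/default/scx`. For the LAVD example above, it prints exactly the scx_loader configuration shown earlier.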

Debugging in the scx_loader

- Checking the service status

  ```shell
  systemctl status scx_loader.service
  ```

- Viewing all the service log entries

  ```shell
  journalctl -u scx_loader.service
  ```

- Viewing only the logs of the current boot

  ```shell
  journalctl -u scx_loader.service -b 0
  ```

In order to get a more detailed log, follow these steps.

- Edit the service file

  ```shell
  sudo systemctl edit scx_loader.service
  ```

- Add the following line under the `[Service]` section (include the `[Service]` header if the file does not have one yet)

  ```ini
  [Service]
  Environment=RUST_LOG=trace
  ```

- Restart the service

  ```shell
  sudo systemctl restart scx_loader.service
  ```

- Check the logs again for more detailed debugging information.
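If you prefer not to use an interactive editor, the same override can be written directly as a systemd drop-in file; `systemctl edit` stores it as `override.conf` in the service's `.d` directory. A sketch; `scx_trace_dropin` and the `ETC_ROOT` override are hypothetical names. Unlike `systemctl edit`, writing the file by hand requires `systemctl daemon-reload` before restarting the service.

```shell
# Non-interactive alternative to "systemctl edit": write the drop-in that
# enables trace logging for scx_loader. Run as root.
# ETC_ROOT is a hypothetical override (defaults to /etc) for testing.
scx_trace_dropin() {
    dir="${ETC_ROOT:-/etc}/systemd/system/scx_loader.service.d"
    mkdir -p "$dir" || return 1
    printf '[Service]\nEnvironment=RUST_LOG=trace\n' > "$dir/override.conf"
}
```

Afterwards run `systemctl daemon-reload && systemctl restart scx_loader.service`. To return to normal logging, delete `override.conf`, reload, and restart again.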

Why does scheduler X perform worse than another?

- There are many variables to consider when comparing them. For example, how do they measure a task’s weight? Do they prioritize interactive tasks over non-interactive ones? Ultimately, it depends on their design choices.

Why does everyone say scheduler X is the best for a given use case, when it does not perform as well for me?

- As in the previous answer, your CPU and its design, such as the core layout, how cache is shared across the cores, and other related factors, can make a scheduler operate less efficiently.

- That’s why having choices is one of the highlights of the sched-ext framework, so don’t be afraid to try the schedulers and see which one works best for your use case.

Examples of use cases: FPS stability, maximum performance, responsiveness under intensive workloads, etc.

The use cases of these schedulers are quite similar… why is that?

Primarily because they are multipurpose schedulers, which means they can accommodate a variety of workloads, even if they may not excel in every area.

- To determine which scheduler suits you best, there’s no better advice than to try it out for yourself.

Why am I missing a scheduler that some users are mentioning or testing in the CachyOS Discord server?

Make sure you’re using the bleeding edge version of the scx-scheds package, named `scx-scheds-git`.

- Another possible reason is that the scheduler is very new and is currently being tested by users, so it has not yet been added to the `scx-scheds-git` package.

Why did the scheduler suddenly crash? Is it unstable?

- There could be a few reasons why this happened:

  - One of the most common reasons is running ananicy-cpp alongside the scheduler. This is why we added the ananicy-cpp warning above.

  - Another reason could be that the workload you were running exceeded the limits and capacity of the scheduler, causing it to stall.

    - Example of an unreasonable workload: hackbench

  - Or, more obviously, you’ve found a bug in the scheduler. If so, please report it as an issue on its GitHub repository or let the developers know about it in the sched-ext channel of the CachyOS Discord server.
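Since ananicy-cpp is such a common culprit, a quick pre-flight check before starting a scheduler can save a crash. A minimal sketch using `systemctl is-active --quiet`, which exits with status 0 only when the unit is active; `check_ananicy` and the `SYSTEMCTL` override (used only to test with a stub) are hypothetical names.

```shell
# Warn if ananicy-cpp is running before you start a sched-ext scheduler.
# "systemctl is-active --quiet" exits 0 only when the unit is active.
# SYSTEMCTL is a hypothetical override so the check can be tested with a stub.
check_ananicy() {
    if ${SYSTEMCTL:-systemctl} is-active --quiet ananicy-cpp.service; then
        echo "ananicy-cpp is active; stop it with: systemctl disable --now ananicy-cpp" >&2
        return 1
    fi
    echo "ananicy-cpp is not running"
}
```

For example, `check_ananicy && sudo scx_rusty` only starts the scheduler when ananicy-cpp is not active.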

I have previously used the scx_loader in the Kernel Manager GUI. Do I still need to follow the transition steps?

- In this particular case, no, it is not necessary because the Kernel Manager already handles the transition process.

- Unless you have previously added custom flags in `/etc/default/scx` and still want to use them.

Learn More

- Sched_ext YT playlist

- LWN: The extensible scheduler class (February, 2023)

- arighi’s blog: Implement your own kernel CPU scheduler in Ubuntu with sched_ext (July, 2023)

- David Vernet’s talk: Kernel Recipes 2023 - sched_ext: pluggable scheduling in the Linux kernel (September, 2023)

- Changwoo’s blog: sched_ext: a BPF-extensible scheduler class (Part 1) (December, 2023)

- arighi’s blog: Getting started with sched_ext development (April, 2024)

- Changwoo’s blog: sched_ext: scheduler architecture and interfaces (Part 2) (June, 2024)

- arighi’s YT channel: scx_bpfland Linux scheduler demo: topology awareness (August, 2024)

- David Vernet’s talk: Kernel Recipes 2024 - Scheduling with superpowers: Using sched_ext to get big perf gains (September, 2024)